Programmed Oppression

*This post was written as part of my journey as a Ph.D. student at the University of Regina.

Critical theory, the legacy of the theoretical work of the Frankfurt School, is centred on changing the circumstances of the oppressed. Giroux (2009) explains that critical theory in education "…enables educators to become more knowledgeable about how teachers, students, and other educational workers become part of the system," a system reinforced through organizational practices, messages, and values and often referred to as the hidden curriculum. In educational technology, the prevailing school of thought has framed classroom technology as a hierarchy in which those with greater access to devices, faster broadband, and higher levels of classroom use are seen as more advanced than those without (Zhao, Zhang, Lei, & Qiu, 2016). While this mindset would lead one to believe that the oppressed are those without consistent access to educational technology, it cannot be ignored that technology itself has been programmed in ways that marginalize, and even harm, specific groups of people.

When examining the role critical theory can play in educational research, Giroux (2009) identified three central assumptions drawn from the positivist perspective. The first views schools as a force for educating the oppressed about their situation and for showing where their group sits within the hierarchy of oppressed versus oppressor. The second, education on how to effectively articulate oppression, requires in-depth analysis of one's situation so that the cultural distortions of the oppressors are removed from the conversation. Lastly, education needs to establish a motivational connection in which the desires and needs of the marginalized population are identified and a vision for the future is established. With these assumptions in mind, technology developers need to evaluate not only their products but also the teams behind them. What are the demographics of their programming teams? What perspectives are absent from the development team? What bias has been written into the code of the software, and what effect could it have on end users? There is a hidden curriculum embedded within technology that needs to be openly discussed at all levels of education so that we can work together on changing the circumstances of the oppressed. This post provides an overview of the current demographics, and potential biases, of global ICT development, with a focus on the Canadian market. It then critiques the identified concerns and shares actionable items for educators drawn from the existing research. Appendices with additional resources are also included to help educators review educational software and to assist stakeholders in selecting programs that cause the least harm.

ICT Development Demographics

Many of the prominent programs our students interact with, including search engines and social media, have been programmed by teams in which white cisgender men are overrepresented. Leavy (2018) argues that "if that data is laden with stereotypical concepts of gender and culture, the resulting application of the technology will perpetuate this bias." So, what does the data show about the demographics of the ICT field and the potential biases associated with its majority groups?

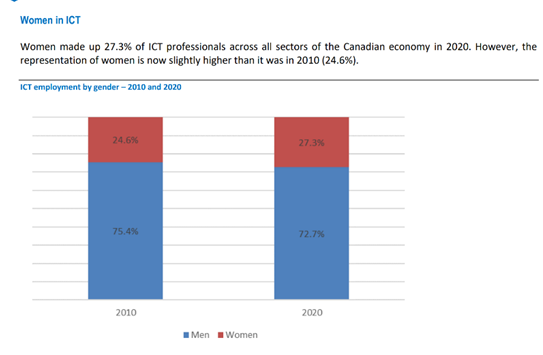

Identified Gender. Globally, individuals identifying as women make up 26.7% of ICT professionals, and Canada aligns closely at 27.3% (Howarth, 2022; Herron & Ivus, 2021). Women's representation in the field is growing slowly: Canadian figures show an increase from 24.6% over the past decade, as shown in Figure 1, taken from ICTC's Digital Economy Annual Review 2020.

Figure 1. Women in ICT

This trend is mirrored in ICT-related organizations such as the Manitoba Association of Educational Technology Leaders (MAETL), whose membership is 22.2% female-identifying (MAETL, 2022), and the Manitoba Association for Computing Educators (ManACE), whose board is 20% female-identifying (ManACE, 2022).

Age. While employment in the ICT sector is steadily increasing across the globe, research indicates that youth, defined as those aged 15 to 29, are just as likely to be employed in this field as their counterparts aged 30 and older (International Labour Organization, 2022, p. 123). In Canada, youth employment for the same age range has decreased slightly over the past ten years, falling from 7.6% of the country's ICT professionals to 7.3% in 2021 (Herron & Ivus, 2021, p. 16).

Diversity. Within Canada, immigrants represent 38% of ICT professionals, a share that has risen by about 1% each year over the past decade (Herron & Ivus, 2021, p. 17). Indigenous individuals, by contrast, represent only 1.2% of Canada's ICT sector despite being the fastest-growing population in the country (Indigenous Supply, 2021, p. 5). This demographic has been identified as an important talent pool for future ICT sectors at both the Canadian and global scales, with the 2017 World Telecommunication Development Conference adopting multiple resolutions on the topic, including

“the necessity of continuing to foster the training of indigenous technicians on the basis of their cultural practices and technological innovation solutions, while at the same time ensuring the availability of resources and spectrum to support the development and sustainability of telecommunication/ICT networks operated by indigenous peoples”

(ITU, 2017, p. 421)

Potential Biases. With these statistics in mind, we can generalize that the ICT sector is made up primarily of people who identify as male, are aged 30 or older, and come from colonial roots. Friedman and Nissenbaum remind us that common software biases cater to the lived experiences of the majority population, with potential gaps in areas such as sexual orientation, class, gender, literacy levels, handedness, and physical disabilities (1996, p. 343). These biases produce user experiences, search results, image selections, and advertisements that reflect the perspectives of the programmers, leaving end users who do not share those perspectives feeling unrepresented.

Software Biases in Practice

Perhaps the most viral example of software bias was brought to the forefront in 2009 by Wanda Zamen in a YouTube video entitled "HP computers are racist," which has over three million views. Wanda, who has a light skin tone, offers a comedic anecdote about the face-tracking software on her Hewlett-Packard (HP) computer: it seamlessly tracks her movement around her workspace but repeatedly fails to recognize her coworker Desi, the primary difference being that Desi has a dark skin tone. While the video is comedic, it clearly highlights a software bias in how skin tone was programmed into the facial recognition software. Although we cannot identify the demographics of this specific development team, Sandvig et al. argue that for this failure to occur, HP would have had to explicitly define what counts as skin colour and what does not (2016, pp. 4973–4975). Furthermore, for this error to reach public end users, we can conclude that HP's testing did not include participants with a diverse range of skin tones. A simple oversight? Or a sign of systemic bias prevalent across multiple HP departments?

A second example of software bias can be found in student information systems (SIS). These programs provide a digital interface for recording and managing student information, including demographics, caregiver contacts, scheduling, assessment results, behavioural anecdotes, and more. Because SIS data is often tied to formal reporting documents and drawn from birth records, there is often a legal requirement that a student's name be recorded as it appears under The Vital Statistics Act (Manitoba Education, 2012, p. 11). However, for transgender students who go by a name other than their birth name, this requirement creates a potentially harmful situation in which the student is forced to confront their dead name during attendance, when receiving a report card, or when logging into student software that is often synchronized with the SIS. Harrington and Quentilla outlined their calls to action in their 2020 Transgender Inclusion and Equity Program, which included student registration requirements:

“Class website and rosters should indicate student’s lived name instead of their dead name when it comes to attendance of class and identifying who is who. Their dead name should not be indicated anywhere. Exposure of dead name can lead to misgendering and non-consensual outing of the person. Not only that, but it can lead the student to feeling singled out and exposure to transphobia.”

(Harrington & Quentilla, 2020, p. 2)

Concern arises when an SIS does not allow users to enter a secondary name, sometimes labelled a preferred name or nickname, thereby reinforcing a cisgender assumption.
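To make the technical side of this concern concrete, here is a minimal, hypothetical sketch in Python of how a student record could keep the legally required name for formal documents while showing a lived name everywhere else. The class, field, and method names (`StudentRecord`, `lived_name`, `display_name`, `legal_document_name`) and the sample students are illustrative assumptions, not taken from any real SIS or from the Manitoba guidelines.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StudentRecord:
    """Hypothetical SIS record; field names are illustrative only."""
    student_id: str
    legal_name: str                   # name as registered under vital statistics law
    lived_name: Optional[str] = None  # name the student actually uses, if different

    def display_name(self) -> str:
        """Name for attendance, rosters, and logins synced from the SIS.

        Falls back to the legal name only when no lived name is recorded.
        """
        return self.lived_name or self.legal_name

    def legal_document_name(self) -> str:
        """Name reserved for documents where the law requires the registered name."""
        return self.legal_name


# Usage: a class roster built from SIS records shows lived names by default.
roster = [
    StudentRecord("001", "Alex Smith"),
    StudentRecord("002", "Jordan Lee", lived_name="Riley Lee"),
]
for student in roster:
    print(student.display_name())
```

The design choice being illustrated is that the lived name becomes the default for everyday display, and the legal name is confined to the narrow set of documents that legally require it, rather than the other way around.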

The assumption of ability is a further example of software bias, and the presence or absence of accessibility features such as closed captioning is a prime example. Hearing loss can affect up to one in four students, and closed captioning has been identified as a beneficial aid for all learners, not just those within the deaf and hard of hearing (DHH) community (Scoresby et al., 2022, p. 5). However, this prevalence does not translate into immediate inclusion of accessibility features in software. The popular streaming site Netflix added closed captioning to its shows and movies only after legal action by the National Association of the Deaf (NAD, 2011). While the NAD succeeded, with 100% of Netflix offerings featuring closed captioning by 2014, it is unreasonable to rely on minority advocacy to correct software bias after the fact. McDonnell (2022, p. 4) argues that a shift towards "more accessible norms must include action on the part of majority-hearing creators." This proactive approach is echoed by Friedman and Nissenbaum, who advocate critiquing bias at the start of the design process and call on software designers to develop a solid understanding of the biases relevant to their area of focus (1996, p. 344).

Actionable Items for Educators

Giroux (2009) highlights that students internalize the cultural messaging of the educational structure through every aspect of their experience, including what may be considered "insignificant practices of daily classroom life." For example, the Immersive Reader tool in Microsoft's Office 365 suite supports over 60 languages, none of which are Indigenous languages of the treaty territories of Manitoba (Microsoft, 2022). What message does this send to students? That Indigenous languages are not valued? That Indigenous peoples cannot be involved in technology development? Educators are tasked not only with integrating technology into their curricular programming but also with evaluating and selecting programs that do not perpetuate a hidden curriculum catering to a particular demographic.

Identifying Types of Software Bias: The first step towards effective evaluation is understanding the multiple ways in which bias can be present within software. Friedman and Nissenbaum outline three types of software bias: preexisting, technical, and emergent (1996, p. 332). A preexisting bias is grounded in the social practices and attitudes of the software development team; it is arguably present in the HP issue identified by Wanda. A technical bias relates to limitations of the software and a failure to consider certain features, such as the inability to add a preferred name or the absence of closed captioning. Finally, an emergent bias arises in the context of use, such as a mismatch in purpose when students are given software designed for the corporate sphere. A full review of the three types of software bias, with examples, is outlined in Appendix A.

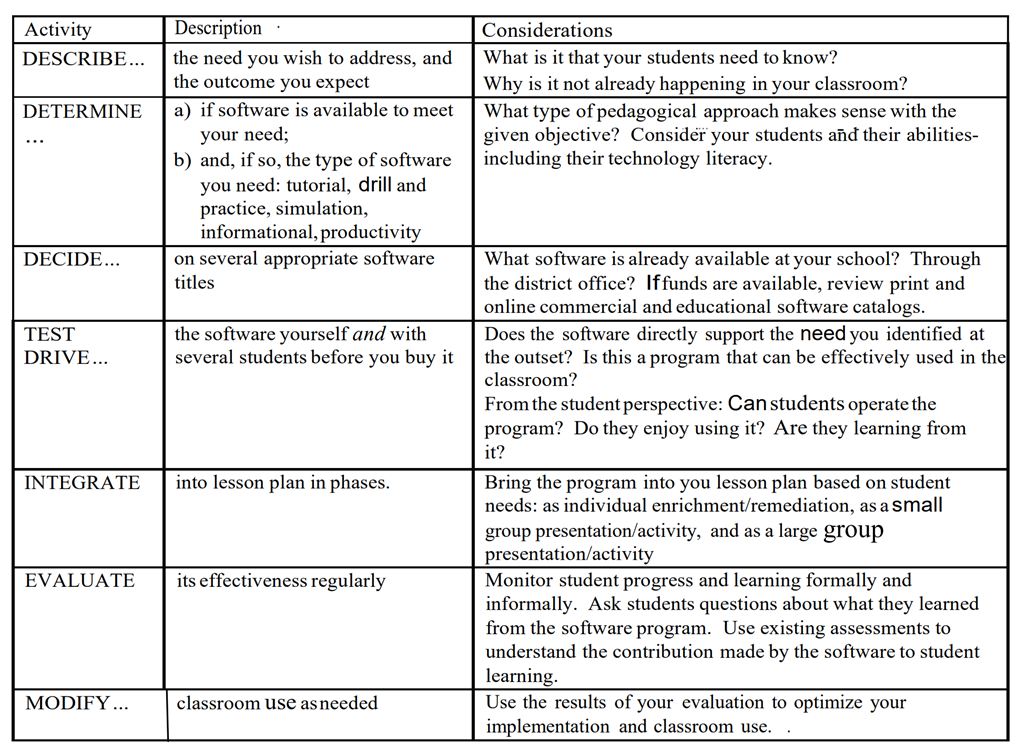

Critical Evaluation of Software: Critically evaluating software can be a monumental task for educators, especially in teaching environments that cannot offer supporting technology positions such as Coordinators of ICT or Digital Literacy Coaches. In response, evaluation models such as Marshall and Hillman's (2000) 4DIEM Model seek to give educators a framework for making more informed decisions about the software they implement. In this model, educators are asked not only to think critically about proposed software before it is used with students but also to participate in ongoing evaluation of the experience while it is in use. The 4DIEM Model encourages educators to adjust their use of the software based on the results they see with their own students and in their unique teaching context. An overview of the 4DIEM Model, with examples, is included in Appendix B.

Conclusion

Many of the prominent programs our students interact with, including search engines and social media, cater to the lived experiences of the majority population, with potential gaps in areas such as sexual orientation, class, gender, literacy levels, handedness, and physical disabilities (Friedman & Nissenbaum, 1996, p. 343). While digital equity and access to technology continue to be central topics in education, it cannot be assumed that the availability of these tools will bridge the gap between the "haves and have nots" of current society. The hierarchical approach to technology inclusion fails to address the systemic oppression written into the very code of the programs themselves. The hidden curriculum embedded within technology use needs to be openly discussed at all levels of education so that we can work together on changing the circumstances of the oppressed.

References

Cutean, A. (2017). ICTC releases report on Indigenous participation in Canada’s digital economy. Information and Communications Technology Council (ICTC). Retrieved from: https://www.ictc-ctic.ca/ictc-releases-report-indigenous-participation-canadas-digital-economy/

Friedman, B. & Nissenbaum, H. (1996). Bias in computer systems. ACM Transactions on Information Systems, 14(3), 330-347.

Giroux, H. A. (2009). Critical theory and educational practice. In A. Darder, M. P. Baltodano, & R. D. Torres (Eds.), The critical pedagogy reader (pp. 27–51). Routledge.

Harrington, B. & Quentilla, A. (2020). Transgender inclusion and equity program.

Herron, C., & Ivus, M. (2021). Digital economy annual review 2020. Information and Communications Technology Council (ICTC), Ottawa, Canada.

ITU. (2017). Final report. World Telecommunication Development Conference (WTDC-17). Retrieved from: https://www.itu.int/en/ITU-D/Conferences/WTDC/WTDC17/Documents/WTDC17_final_report_en.pdf

Leavy, S. (2018). Gender bias in artificial intelligence: the need for diversity and gender theory in machine learning. In Proceedings of the 1st International Workshop on Gender Equality in Software Engineering (GE ’18). Association for Computing Machinery, New York, NY, USA, 14–16. DOI: https://doi.org/10.1145/3195570.3195580

Manitoba Association for Computing Educators. (2022). www.manace.ca

Manitoba Association of Educational Technology Leaders. (2022). www.maetl.mb.ca

Manitoba Education. (2012). Manitoba pupil file guidelines. Retrieved from: https://www.edu.gov.mb.ca/k12/docs/policy/mbpupil/mbpupil.pdf

Marshall, J., & Hillman, M. (2000). Effective curricular software selection for K–12 educators. In Society for Information Technology & Teacher Education International Conference (pp. 101–106). Association for the Advancement of Computing in Education (AACE).

McDonnell, E. (2022). Understanding social and environmental factors to enable collective access approaches to the design of captioning technology. In The 24th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS '22). https://doi.org/10.1145/3517428.3550417

Microsoft. (2022). Languages and products supported by Immersive Reader. Retrieved from: https://support.microsoft.com/en-us/topic/languages-and-products-supported-by-immersive-reader-47f298d6-d92c-4c35-8586-5eb81e32a76e

National Association of the Deaf. (2011, June 16). NAD files disability civil rights lawsuit against Netflix. Retrieved from: https://www.nad.org/2011/06/16/nad-files-disability-civil-rights-lawsuit-against-netflix/

Sandvig, C., Hamilton, K., Karahalios, K., & Langbort, C. (2016). Automation, algorithms, and politics | When the algorithm itself is a racist: diagnosing ethical harm in the basic components of software. International Journal of Communication, 10(19). Retrieved from https://ijoc.org/index.php/ijoc/article/view/6182/1807

Scoresby, K., Wallis, D., Huslage, M., & Chaffin, M. (2022). Teaching note—Turn on the CC: Increase inclusion for all your students. Journal of Social Work Education. https://doi.org/10.1080/10437797.2022.2062510

wzamen01. (2009, December 10). HP computers are racist [Video]. YouTube.

Zhao, Y., Zhang, G., Lei, J., & Qiu, W. (2016). Never send a human to do a machine's job: Correcting the top 5 edtech mistakes. Thousand Oaks, CA: Corwin.

Appendix A – Categories of Bias in Computer System Designs

Friedman, B., & Nissenbaum, H. (1996). Bias in computer systems. ACM Transactions on Information Systems, 14(3), 334–335.

Appendix B: 4DIEM Model of Software Evaluation

Marshall, J., & Hillman, M. (2000). Effective curricular software selection for K–12 educators. In Society for Information Technology & Teacher Education International Conference (pp. 101–106). Association for the Advancement of Computing in Education (AACE).